LangChain Integration

This guide explains how to integrate your Gateway-generated API with LangChain, allowing you to create AI agents that can interact with your data through the API.

Prerequisites

Before integrating with LangChain, ensure you have:

- A running Gateway API (either local or hosted)

- Python 3.9+ installed (current LangChain releases no longer support Python 3.8)

- An OpenAI API key, or access to another LLM provider supported by LangChain

Installing Required Packages

First, install the necessary Python packages:

```bash
# Install LangChain and related packages
pip install langchain langchain-openai langchain-community requests
```

Integration Example

Here’s a complete example of how to integrate a Gateway API with LangChain using the OpenAPI specification:

import osimport requestsfrom langchain_community.agent_toolkits.openapi.toolkit import OpenAPIToolkitfrom langchain_openai import ChatOpenAIfrom langchain_community.utilities.requests import RequestsWrapperfrom langchain_community.tools.json.tool import JsonSpecfrom langchain.agents import initialize_agent

# Define API detailsAPI_SPEC_URL = "https://dev1.centralmind.ai/openapi.json" # Replace with your API's OpenAPI spec URLBASE_API_URL = "https://dev1.centralmind.ai" # Replace with your API's base URL

# Set OpenAI API keyos.environ["OPENAI_API_KEY"] = "your-openai-api-key" # Replace with your actual API key

# Load and parse OpenAPI specificationapi_spec = requests.get(API_SPEC_URL).json()json_spec = JsonSpec(dict_=api_spec)

# Initialize components# You can use X-API-KEY header to set authentication using API keysllm = ChatOpenAI(model_name="gpt-4", temperature=0.0)toolkit = OpenAPIToolkit.from_llm(llm, json_spec, RequestsWrapper(headers=None), allow_dangerous_requests=True)

# Set up the agentagent = initialize_agent(toolkit.get_tools(), llm, agent="zero-shot-react-description", verbose=True)

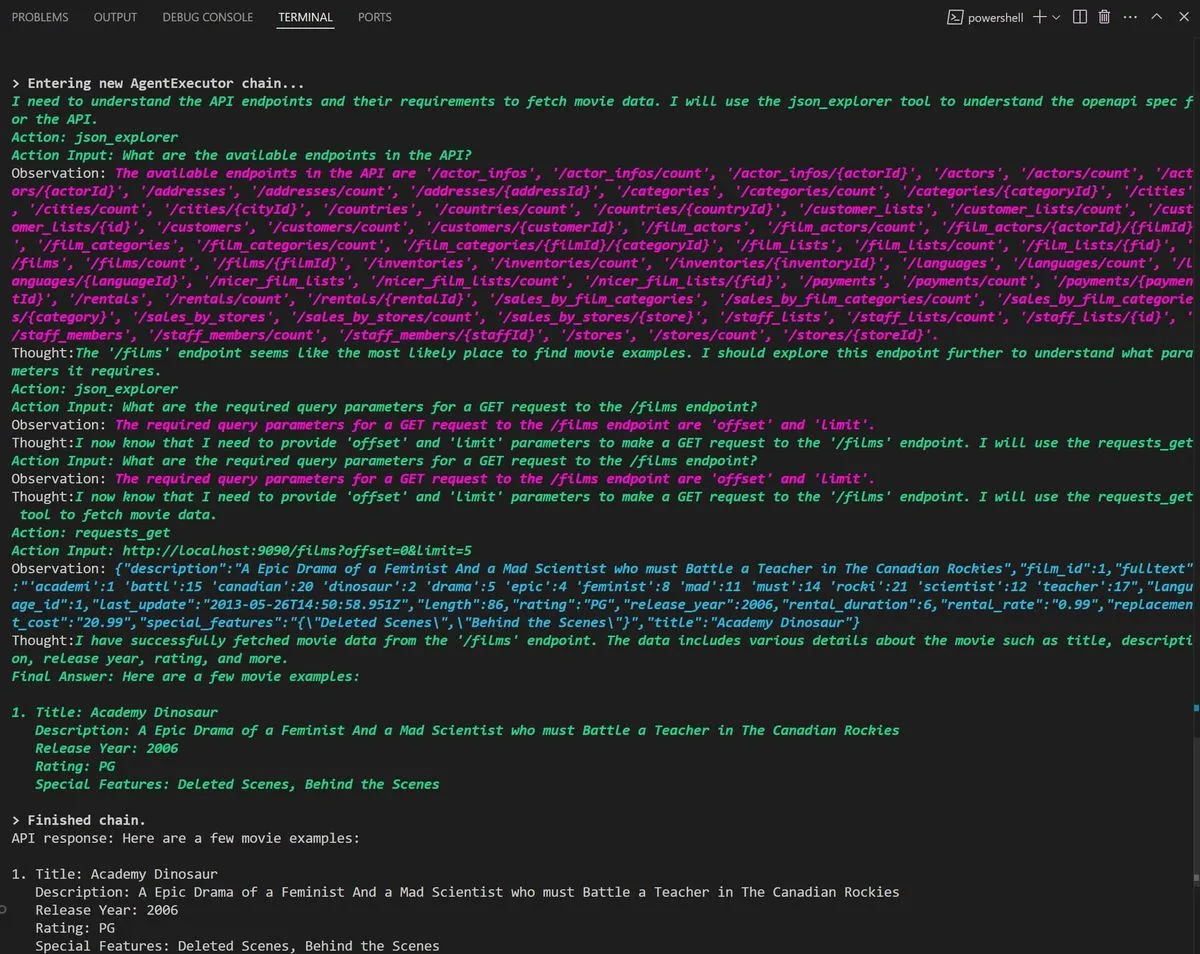

# Make a requestresult = agent.run(f"Find specs and pricing for instance c6g.large, use {BASE_API_URL}")print("API response:", result)As a result you should see a chain of thougth like this:

Code Explanation

Let’s break down the integration code:

1. API Configuration

```python
API_SPEC_URL = "https://dev1.centralmind.ai/openapi.json"
BASE_API_URL = "https://dev1.centralmind.ai"
```

- API_SPEC_URL: URL of your Gateway API's OpenAPI specification (Swagger JSON)

- BASE_API_URL: base URL of your Gateway API
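Rather than editing these constants in code, you may prefer to read them from the environment. The sketch below is an assumption, not part of Gateway or LangChain: GATEWAY_BASE_URL is a hypothetical variable name, and the spec is assumed to be served at `<base>/openapi.json` as in the hosted example; adjust if your deployment serves it elsewhere.

```python
import os

# Default taken from the example above; GATEWAY_BASE_URL is a hypothetical env var name.
DEFAULT_BASE_URL = "https://dev1.centralmind.ai"


def gateway_urls(env=os.environ):
    """Return (base_url, spec_url) for the Gateway API.

    Assumes the OpenAPI spec lives at <base_url>/openapi.json.
    """
    base_url = env.get("GATEWAY_BASE_URL", DEFAULT_BASE_URL).rstrip("/")  # drop trailing slash
    return base_url, f"{base_url}/openapi.json"


BASE_API_URL, API_SPEC_URL = gateway_urls()
print(BASE_API_URL, API_SPEC_URL)
```

Passing a plain dict as `env` makes the function easy to check without touching the real environment, for example `gateway_urls({"GATEWAY_BASE_URL": "http://localhost:8080/"})`.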

2. Load API Specification

```python
api_spec = requests.get(API_SPEC_URL).json()
json_spec = JsonSpec(dict_=api_spec)
```

This fetches the OpenAPI specification and wraps it in a JsonSpec object, the format LangChain's OpenAPI toolkit works with.
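To see what the agent will be working with, it can help to list the operations the spec exposes. This is a minimal standard-library sketch assuming a standard OpenAPI `paths` object; `sample_spec` and its `/instances` paths are hypothetical stand-ins for the `api_spec` fetched above.

```python
# HTTP methods allowed as keys of an OpenAPI path item; other keys
# (e.g. "parameters", "summary", "servers") are not operations.
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def list_operations(api_spec):
    """Return sorted (METHOD, path, summary) tuples from an OpenAPI dict."""
    ops = []
    for path, item in api_spec.get("paths", {}).items():
        for method, op in item.items():
            if method.lower() in HTTP_METHODS:  # skip path-level keys like "parameters"
                ops.append((method.upper(), path, op.get("summary", "")))
    return sorted(ops)


# Hypothetical spec fragment; in practice pass the api_spec loaded above.
sample_spec = {
    "openapi": "3.0.0",
    "paths": {
        "/instances": {"get": {"summary": "List instances"}, "parameters": []},
        "/instances/{id}": {"get": {"summary": "Get one instance"}},
    },
}

for method, path, summary in list_operations(sample_spec):
    print(f"{method:6} {path:20} {summary}")
```

If this list is empty or missing the endpoints you expect, the agent will not be able to find them either.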

3. Initialize LangChain Components

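```python
llm = ChatOpenAI(model_name="gpt-4", temperature=0.0)
toolkit = OpenAPIToolkit.from_llm(llm, json_spec, RequestsWrapper(headers=None), allow_dangerous_requests=True)
```

- Creates a ChatOpenAI instance using GPT-4; temperature=0.0 keeps its output as deterministic as possible

- Initializes the OpenAPIToolkit with the LLM, the API specification, and a RequestsWrapper that performs the HTTP calls; allow_dangerous_requests=True explicitly opts in to letting the agent send requests to your API, which langchain-community requires for this toolkit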
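Because allow_dangerous_requests=True lets the agent issue any HTTP method, you might hand it only the read tools. This sketch assumes the write-capable tools are named requests_post, requests_patch, requests_put and requests_delete, as in current langchain-community releases; print `[t.name for t in toolkit.get_tools()]` to check your version. The SimpleNamespace objects are stand-ins so the example runs without LangChain.

```python
from types import SimpleNamespace

# Assumed names of the write-capable tools in langchain-community's requests toolkit;
# verify against [t.name for t in toolkit.get_tools()] for your installed version.
WRITE_TOOL_NAMES = frozenset({"requests_post", "requests_patch", "requests_put", "requests_delete"})


def read_only_tools(tools, blocked=WRITE_TOOL_NAMES):
    """Return the tools whose .name is not in `blocked`, preserving order."""
    return [tool for tool in tools if getattr(tool, "name", None) not in blocked]


# Stand-in objects; in practice pass toolkit.get_tools().
fake_tools = [
    SimpleNamespace(name=n)
    for n in ("json_explorer", "requests_get", "requests_post", "requests_delete")
]
print([t.name for t in read_only_tools(fake_tools)])
```

In the real setup you would pass `read_only_tools(toolkit.get_tools())` to initialize_agent instead of `toolkit.get_tools()`. This filtering happens only on the client side; limiting what the API credentials may do on the server remains the stronger guarantee.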

4. Set Up and Run the Agent

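```python
agent = initialize_agent(toolkit.get_tools(), llm, agent="zero-shot-react-description", verbose=True)
result = agent.run(f"Find specs and pricing for instance c6g.large, use {BASE_API_URL}")
```

- Creates a zero-shot ReAct agent that can use the API tools

- Runs the agent with a natural language query; mentioning BASE_API_URL in the query helps the agent know which host to send requests to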

Authentication Options

If your API requires authentication, you can add headers to the RequestsWrapper:

```python
# Example with API key authentication
headers = {"X-API-KEY": "your-api-key"}
toolkit = OpenAPIToolkit.from_llm(llm, json_spec, RequestsWrapper(headers=headers), allow_dangerous_requests=True)
```

Advanced Configuration

For more complex scenarios, you can:

- Add custom tools: Combine Gateway API tools with other LangChain tools

- Use different agent types: Try structured or conversational agents

- Customize prompts: Create specialized instructions for the agent

Troubleshooting

If you encounter issues:

- Verify your API is running and accessible

- Check that the OpenAPI spec URL returns a valid JSON document

- Ensure your LLM API key is valid and has sufficient credits

- Set `verbose=True` in the agent initialization to see the agent's detailed reasoning
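Some of these checks can be scripted. The sketch below is a hypothetical helper, not part of Gateway or LangChain, and uses only the standard library. It confirms that OPENAI_API_KEY is set (it cannot tell whether the key is valid or has credits) and that the spec URL returns JSON with an `openapi` (or `swagger`) version field and at least one path.

```python
import json
import os
import urllib.request


def looks_like_openapi(doc):
    """Return a list of problems found in a parsed spec (empty list means it looks OK)."""
    if not isinstance(doc, dict):
        return ["spec is not a JSON object"]
    problems = []
    if "openapi" not in doc and "swagger" not in doc:
        problems.append("missing 'openapi' (or 'swagger') version field")
    if not doc.get("paths"):
        problems.append("no operations under 'paths'")
    return problems


def preflight(spec_url, timeout=10):
    """Check the OpenAI key is set and the spec URL serves an OpenAPI document."""
    problems = []
    if not os.environ.get("OPENAI_API_KEY"):
        problems.append("OPENAI_API_KEY is not set")
    try:
        with urllib.request.urlopen(spec_url, timeout=timeout) as resp:
            doc = json.load(resp)
    except (OSError, ValueError) as exc:  # network/HTTP errors, bad URLs, invalid JSON
        problems.append(f"could not load spec from {spec_url}: {exc}")
    else:
        problems.extend(looks_like_openapi(doc))
    return problems
```

For example, `print(preflight(API_SPEC_URL))` prints an empty list when every check passes.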

For more detailed information on LangChain’s OpenAPI integration, refer to the LangChain Documentation.